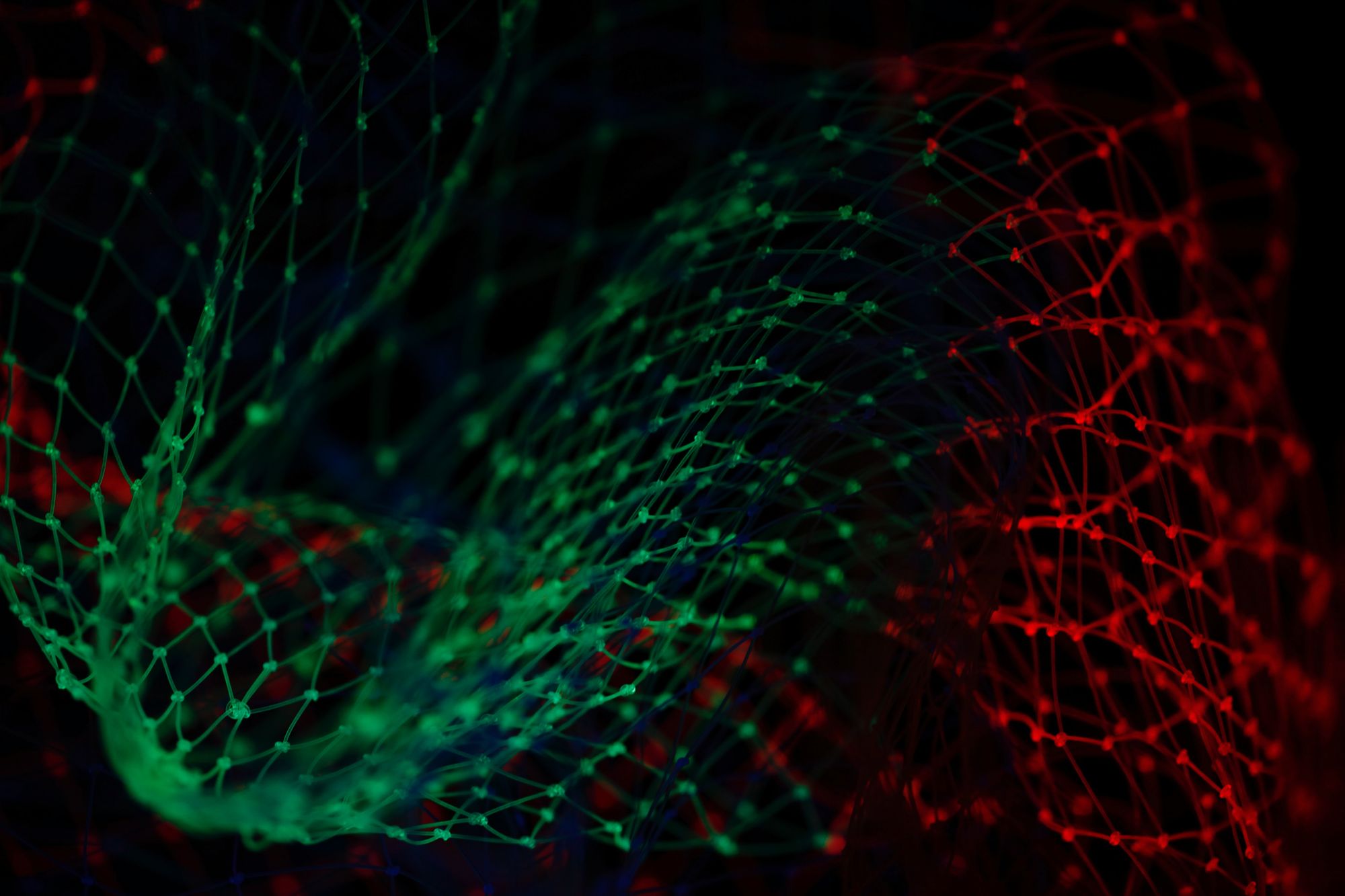

One of the most impressive and controversial papers of 2020 was GPT-3 from OpenAI. It’s nothing particularly new: it’s mostly a much bigger version of GPT-2, which came out in 2019. With 175 billion parameters, it was by far the largest machine learning model at the time it was released.

It’s a fairly simple algorithm: it learns to predict the next word in a text, and it learns this by training on several hundred gigabytes of text gathered from the Internet. To use it, you give it a prompt (a starting sequence of words) and it starts generating more words, one at a time. Eventually it decides to finish the text by emitting a stop token.
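To make that loop concrete, here is a minimal sketch of "predict the next word, repeat until a stop token". It is not how GPT-3 works inside: GPT-3 is a huge neural network, while this toy only counts which word follows which in a three-sentence corpus. The names `train`, `generate` and the `<stop>` marker are made up for the illustration.

```python
import random
from collections import Counter, defaultdict

STOP = "<stop>"  # hypothetical end-of-text marker, not GPT-3's actual token


def train(texts):
    """Count, for every word, which words follow it in the training texts."""
    counts = defaultdict(Counter)
    for text in texts:
        words = text.split() + [STOP]
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def generate(counts, prompt, max_words=20, seed=0):
    """Extend the prompt one word at a time until STOP is sampled."""
    rng = random.Random(seed)
    words = prompt.split()
    if not words:  # nothing to condition on
        return ""
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:  # word never seen in training: nothing to predict
            break
        candidates = list(followers)
        weights = [followers[w] for w in candidates]
        # Sample the next word in proportion to how often it followed the last one.
        next_word = rng.choices(candidates, weights=weights)[0]
        if next_word == STOP:
            break
        words.append(next_word)
    return " ".join(words)


corpus = [
    "the horse has four legs",
    "the horse eats grass",
    "the child said please",
]
model = train(corpus)
print(generate(model, "the horse"))
```

With this tiny corpus, the prompt "the horse" can only be continued as "the horse has four legs" or "the horse eats grass", because those are the only word sequences the model has ever seen. The real thing looks at the whole preceding text rather than just the last word, which is a big part of why it needs so many parameters and so much training data.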

Using this seemingly stupid approach, GPT-3 is capable of generating a wide variety of interesting texts: it can write poems (not prize-winning, but still), write news articles, imitate well-known authors, make jokes, argue for its own self-awareness and do basic math. And, shockingly to programmers all over the world, who are now afraid the robots will take their jobs, it can code simple programs.

That’s amazing for such a simple approach. The Internet was divided upon seeing these results. Some were welcoming our GPT-3 AI overlords, while others were skeptical, calling it just fancy parroting, without any real understanding of what it says.

I think both sides have a grain of truth. On one hand, it’s easy to find failure cases. It’s easy to make it say things like “a horse has five legs”, showing it doesn’t really know what a horse is. But are humans that different? Think of a small child who is being taught by his parents to say “Please” before his requests. I remember being amused by a small child saying “But I said please” when he was refused by his parents. The kid probably thought that “Please” is a magic word that can unlock anything. Well, not really. In real life we use it because society likes polite people, but saying please when wishing for a unicorn won’t make it any more likely to happen.

And it’s not just little humans who do that. Sometimes even grownups parrot stuff without thinking about it, because that’s what they have heard all their lives and they never questioned it. It actually takes a lot of effort to think, to ensure consistency in your thoughts and to produce novel ideas. In this sense, an artificial intelligence that is around human level might turn out to be a disappointment.

On the other hand, I believe there is a reason why this amazing result happened in the field of natural language processing and not, say, computer vision. It has long been recognized that language is a powerful tool. There is even a saying about it: “The pen is mightier than the sword”. Human language is so powerful that we can encode everything that there is in this universe into it, and then some (think of all the sci-fi and fantasy books). More than that, we use language to get others to do our bidding, to motivate them, to cooperate with them and to change their inner state, making them happy or inciting them to anger.

While there is a common ground in the physical world, oftentimes that is not very relevant to the point we are making: “A rose by any other name would smell as sweet”. Does it matter what a rose is when the rallying call is to get more roses? As long as the message gets across and is understood in the same way by all listeners, no, it doesn’t. Similarly, if GPTx can effect the desired change in its readers, it might be good enough, even if it doesn’t have a mythical understanding of what those words mean.