We saw that machine learning algorithms process large amounts of data to find patterns. But how exactly do they do that?

The first step in a machine learning project is establishing metrics. What exactly do we want to do and how do we know we’re doing it well?

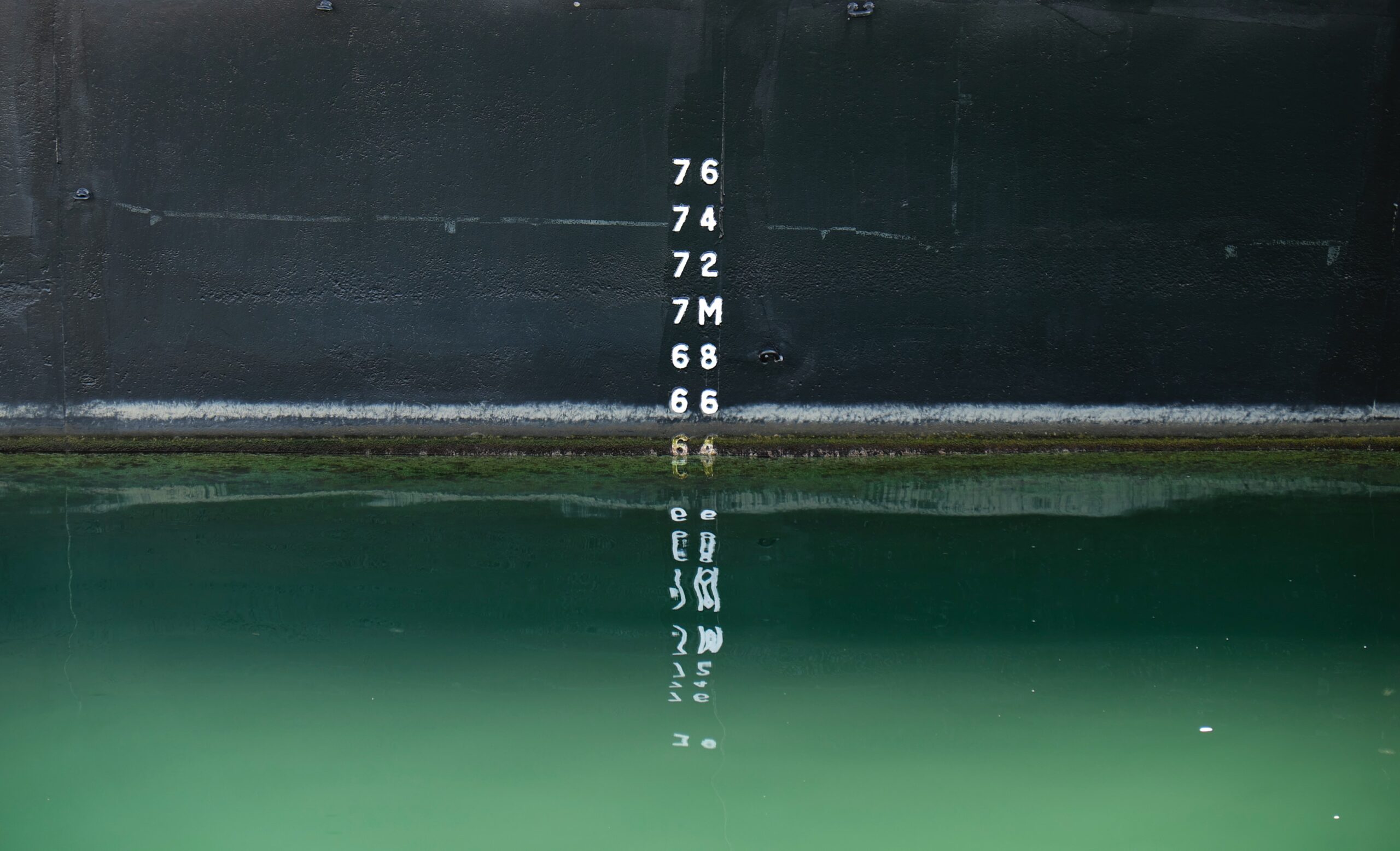

Are we trying to predict a number, such as how much Bitcoin will cost next year? That’s a regression problem. Are we trying to predict who will win the election? That’s a binary classification problem (at least in the USA). Are we trying to recognize objects in an image? That’s a multiclass classification problem.
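To make this concrete, here is a minimal sketch of how the kind of problem changes the metric we might pick. The two functions below (mean absolute error for regression, accuracy for classification) are just common choices, not the only ones, and all the numbers and labels are made up for illustration.

```python
def mean_absolute_error(y_true, y_pred):
    """Regression metric: average size of the error, in the target's own units."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def accuracy(y_true, y_pred):
    """Classification metric: fraction of predictions that match the label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Regression: predicting a number (made-up prices).
actual_prices = [100.0, 150.0, 200.0, 250.0]
predicted_prices = [110.0, 140.0, 230.0, 250.0]
price_error = mean_absolute_error(actual_prices, predicted_prices)

# Binary classification: exactly two possible labels (made-up outcomes).
actual_winner = ["A", "B", "A", "A"]
predicted_winner = ["A", "B", "B", "A"]
winner_accuracy = accuracy(actual_winner, predicted_winner)

# Multiclass classification: more than two possible labels (made-up objects).
actual_object = ["cat", "dog", "car", "dog"]
predicted_object = ["cat", "dog", "dog", "dog"]
object_accuracy = accuracy(actual_object, predicted_object)

print(price_error, winner_accuracy, object_accuracy)
```

Note that the same accuracy function works for both the binary and the multiclass case; only the set of possible labels changes.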

Another question that has to be answered is which kinds of mistakes are worse. Machine learning is not all-knowing, so it will make mistakes, and there are trade-offs to be made. Maybe we are building a system to find tumors in X-rays: in that case it might be better to cry wolf too often and have false positives than to miss a tumor. Or maybe it’s the opposite: we are trying to implement a facial recognition system. If the system wrongly identifies someone as a burglar, the wrong person may be sent to jail, which is a very bad consequence for a mistake made by “THE algorithm”.
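One common way to express this trade-off is through precision (of everything we flagged, how much was right?) and recall (of everything we should have flagged, how much did we catch?). The sketch below assumes a model that outputs a score between 0 and 1 and a threshold we get to choose; the labels and scores are invented for illustration.

```python
def confusion_counts(y_true, scores, threshold):
    """Count (true pos, false pos, false neg, true neg) at a decision threshold."""
    tp = fp = fn = tn = 0
    for label, score in zip(y_true, scores):
        predicted_positive = score >= threshold
        if predicted_positive and label == 1:
            tp += 1
        elif predicted_positive and label == 0:
            fp += 1
        elif not predicted_positive and label == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def precision_recall(y_true, scores, threshold):
    """Precision and recall at a threshold (0.0 when the denominator is empty)."""
    tp, fp, fn, _ = confusion_counts(y_true, scores, threshold)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Made-up data: 1 = tumor present, 0 = no tumor; scores are hypothetical model outputs.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.6, 0.3, 0.7, 0.4, 0.2, 0.1, 0.05]

for threshold in (0.25, 0.5, 0.8):
    precision, recall = precision_recall(y_true, scores, threshold)
    print(f"threshold={threshold}: precision={precision:.2f}, recall={recall:.2f}")
```

With a low threshold, every tumor in this toy data is caught, at the cost of more false alarms. With a high threshold, every flag is correct, but most tumors are missed. Which end we prefer depends on which mistake is worse for the problem at hand.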

These are not just theoretical concerns; they matter a lot when building machine learning systems. Because of this, many ML projects are human-in-the-loop, meaning the model doesn’t decide by itself what to do. It merely makes a suggestion, which a human then confirms. In many cases that is valuable enough, because it makes the human much more efficient. For example, a security guard doesn’t have to watch 20 screens at once, but can instead look only at the footage that was flagged as anomalous.
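A human-in-the-loop setup like the security guard example could look roughly like this. This is only a sketch: the `Clip` class, the `anomaly_score` field, and the 0.8 threshold are all hypothetical names and values chosen for illustration, and the scores are made up rather than produced by a real model.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    camera_id: str
    anomaly_score: float  # hypothetical model output between 0 and 1


def clips_for_review(clips, threshold=0.8):
    """Return only the clips the model flags, most suspicious first.

    The model never acts on its own: it only builds a queue for a human.
    """
    flagged = [clip for clip in clips if clip.anomaly_score >= threshold]
    return sorted(flagged, key=lambda clip: clip.anomaly_score, reverse=True)


# Made-up scores for four cameras.
clips = [
    Clip("cam-01", 0.05),
    Clip("cam-07", 0.85),
    Clip("cam-12", 0.30),
    Clip("cam-19", 0.97),
]

review_queue = clips_for_review(clips)
for clip in review_queue:
    print(f"Please check {clip.camera_id} (score {clip.anomaly_score:.2f})")
```

The guard still makes the final call on every flagged clip; the model just narrows four feeds (or twenty) down to the couple worth looking at.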

Tomorrow we’ll look at the next step: gathering the data.