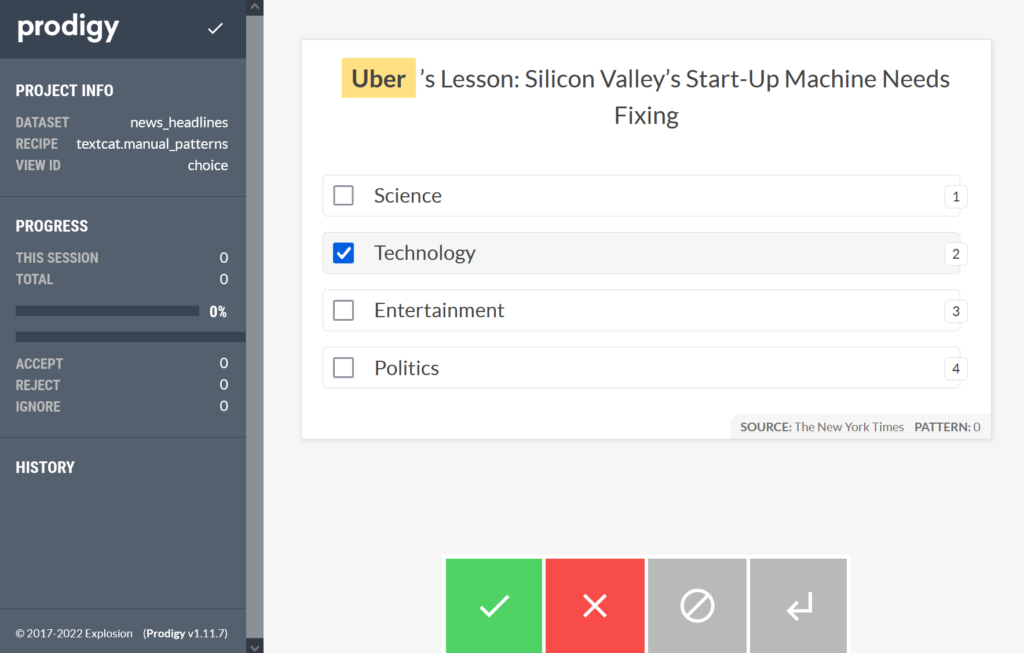

Let’s take a look at how to do multi-label text classification with spaCy. In multi-label text classification, each document can have zero, one or more labels associated with it. This makes the problem harder than regular multi-class classification, both from a learning perspective and from an evaluation perspective. spaCy offers some tools to make it easier.

spaCy

spaCy is a great general-purpose NLP library that can be used out of the box for things like part-of-speech tagging, named entity recognition, dependency parsing, morphological analysis and so on. Besides the built-in components, it can also be used to train custom models, for example for text classification.

spaCy is quite powerful out of the box, but the documentation is often lacking and there are some gotchas that can prevent a model from training, so below is a short guide to training a simple multi-label text classification model with this library.

Training data format

spaCy requires training data to be in its own binary format (DocBin, usually saved with a .spacy extension), so the first step is to transform our data into this format. I will be working with the lex_glue/ecthr_a dataset in this example.

First, we have to load the dataset.

from datasets import load_dataset

dataset = load_dataset("lex_glue", "ecthr_a")
print(dataset)
print(dataset["train"][0])

Which will output the following:

DatasetDict({
    train: Dataset({
        features: ['text', 'labels'],
        num_rows: 9000
    })
    test: Dataset({
        features: ['text', 'labels'],
        num_rows: 1000
    })
    validation: Dataset({
        features: ['text', 'labels'],
        num_rows: 1000
    })
})

{'text': ['11. At the beginning of the events relevant to the application, K. had a daughter, P., and a son, M., born in 1986 and 1988 respectively. P.’s father is X and M.’s father is V. From March to May 1989 K. was voluntarily hospitalised for about three months, having been diagnosed as suffering from schizophrenia. From August to November 1989 and from December 1989 to March 1990, she was again hospitalised for periods of about three months on account of this illness. In 1991 she was hospitalised for less than a week, diagnosed as suffering from an atypical and undefinable psychosis. It appears that social welfare and health authorities have been in contact with the family since 1989.',

'12. The applicants initially cohabited from the summer of 1991 to July 1993. In 1991 both P. and M. were living with them. From 1991 to 1993 K. and X were involved in a custody and access dispute concerning P. In May 1992 a residence order was made transferring custody of P. to X.',

....

'93. J. and M.’s foster mother died in May 2001.'],

'labels': [4]}

The dataset comes with a train, validation and test split. The documents themselves are split into multiple paragraphs and the labels are just integers, not the actual string descriptions of labels. The actual labels are:

labels = ["Article 2", "Article 3", "Article 5", "Article 6", "Article 8", "Article 9", "Article 10", "Article 11", "Article 14", "Article 1 of Protocol 1"]
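To make the mapping between the integer ids and the label names concrete, here is a minimal sketch. The helper names `label_names` and `multi_hot`, and the three toy label lists, are assumptions made for illustration; they are not part of the dataset or of spaCy.

```python
from collections import Counter

LABELS = ["Article 2", "Article 3", "Article 5", "Article 6", "Article 8",
          "Article 9", "Article 10", "Article 11", "Article 14",
          "Article 1 of Protocol 1"]


def label_names(label_ids):
    """Map integer label ids to their article names."""
    return [LABELS[i] for i in label_ids]


def multi_hot(label_ids):
    """Encode label ids as a 0/1 vector with one position per label."""
    active = set(label_ids)
    return [1 if i in active else 0 for i in range(len(LABELS))]


# Hypothetical label lists for three documents (not taken from the dataset):
# one label, no label at all, and two labels.
sample = [[4], [], [1, 3]]

# Count how often each label name occurs across the toy documents.
counts = Counter(name for ids in sample for name in label_names(ids))

print(label_names([4]))
print(multi_hot([1, 3]))
print(counts)
```

Running the same `Counter` over the real training split is a quick way to see how unbalanced the labels are before training.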

To transform a single document into the DocBin format, we have to parse the combined paragraphs with spaCy and add all the labels to the document. The parsing we do here is not very important, so we can use the smallest English model from spaCy.

import spacy

nlp = spacy.load("en_core_web_sm")

d = dataset['train'][0]
# Join the paragraphs into a single string that spaCy can parse
text = "\n\n".join(d['text'])
doc = nlp(text)

# The dataset stores labels as integer ids, so map them to their names first
doc_labels = [labels[idx] for idx in d['labels']]
for l in labels:
    if l in doc_labels:
        doc.cats[l] = 1
    else:
        doc.cats[l] = 0

print(doc[:10])
print(doc.cats)

Which will output:

11. At the beginning of the events relevant
{'Article 2': 0, 'Article 3': 0, 'Article 5': 0, 'Article 6': 0, 'Article 8': 1, 'Article 9': 0, 'Article 10': 0, 'Article 11': 0, 'Article 14': 0, 'Article 1 of Protocol 1': 0}

One gotcha that I ran into is that you have to specify every label for each document (unlike with fastText): the labels that apply to the document get “probability” 1, and the ones that do not apply get “probability” 0. spaCy won’t raise any errors (unlike scikit-learn) if you don’t do this, but the model will not train and you will always get an accuracy of 0.

The above snippet can be made more efficient by using spaCy’s nlp.pipe, which processes documents in batches. In this version we go over the documents twice: once to build up the list of joined paragraphs (which spaCy can process) and once to add the labels.

from spacy.tokens import DocBin
from tqdm import tqdm

# Note: the test split is written out as the dev set used during training
for t, o in [(dataset['train'], "ecthr_train.spacy"), (dataset['test'], "ecthr_dev.spacy")]:
    db = DocBin()
    docs = []
    cats = []
    print("Extracting text and labels")
    for d in tqdm(t):
        docs.append("\n\n".join(d['text']))
        cats.append([labels[idx] for idx in d['labels']])
    print("Processing docs with spaCy")
    # nlp.pipe returns a lazy generator; the parsing happens in the loop below
    docs = nlp.pipe(docs, disable=["ner", "parser"])
    print("Adding docs to DocBin")
    for doc, cat in tqdm(zip(docs, cats), total=len(cats)):
        for l in labels:
            if l in cat:
                doc.cats[l] = 1
            else:
                doc.cats[l] = 0
        db.add(doc)
    print(f"Writing to disk {o}")
    db.to_disk(o)
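A single pass over the data is also possible, because `nlp.pipe` accepts `as_tuples=True` and then yields each Doc together with whatever context was passed in next to its text. The sketch below shows that variant and reads the DocBin back to check that every label made it into `doc.cats`. It is an illustration under assumptions, not the code used for the results in this post: the pipeline is a blank English one (tokenizer only), the label list is shortened, and the two records are made up.

```python
import spacy
from spacy.tokens import DocBin

LABELS = ["Article 2", "Article 3", "Article 8"]  # shortened, hypothetical label set

# Toy records shaped like the dataset rows: paragraphs plus integer label ids.
records = [
    {"text": ["First paragraph.", "Second paragraph."], "labels": [2]},
    {"text": ["A document without any label."], "labels": []},
]

nlp = spacy.blank("en")  # tokenizer only, no trained model needed

# With as_tuples=True the label ids travel alongside each text through nlp.pipe.
pairs = (("\n\n".join(r["text"]), r["labels"]) for r in records)

db = DocBin()
for doc, label_ids in nlp.pipe(pairs, as_tuples=True):
    active = {LABELS[i] for i in label_ids}
    # Every label gets a value: 1.0 if it applies, 0.0 otherwise.
    doc.cats = {name: 1.0 if name in active else 0.0 for name in LABELS}
    db.add(doc)

# Round-trip through bytes and inspect what would be written to the .spacy file.
restored = list(DocBin().from_bytes(db.to_bytes()).get_docs(nlp.vocab))
for doc in restored:
    print(doc.cats)
```

Reading a written file back with `DocBin().from_disk(path)` and looking at a few `doc.cats` values is a cheap way to catch the missing-labels problem before starting a long training run.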

Generating the model config

spaCy has its own config system for training models. You can generate a config with the following command:

> spacy init config --pipeline textcat_multilabel config_efficiency.cfg
Generated config template specific for your use case
- Language: en
- Pipeline: textcat_multilabel
- Optimize for: efficiency
- Hardware: CPU
- Transformer: None
✔ Auto-filled config with all values
✔ Saved config
config_efficiency.cfg
You can now add your data and train your pipeline:
python -m spacy train config_efficiency.cfg --paths.train ./train.spacy --paths.dev ./dev.spacy

By default, it uses a simple bag of words model, but you can set it to use a bigger convolutional model:

spacy init config --pipeline textcat_multilabel --optimize accuracy config.cfg

One thing that I usually change in the generated config is the logging system. I either enable the Weights & Biases logger (which requires wandb to be installed in the virtual environment) or at least enable the progress bar:

[training.logger]
@loggers = "spacy.ConsoleLogger.v1"
progress_bar = true

You can modify any of the hyperparameters of the pipeline here, such as optimizer type or the ngram_size of the model, which is 1 by default (and I usually increase it to 2-3).
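As an illustration, the relevant block of the efficiency config looks roughly like the fragment below, with ngram_size raised to 2. Treat it as a sketch: the architecture version string depends on the installed spaCy version, so keep whatever your generated file contains and change only the ngram_size line.

```ini
[components.textcat_multilabel.model]
@architectures = "spacy.TextCatBOW.v2"
exclusive_classes = false
ngram_size = 2
no_output_layer = false
```

The same value can also be overridden at training time without editing the file, by passing --components.textcat_multilabel.model.ngram_size 2 to spacy train.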

Another thing you can set here is how spaCy should determine the best model at the end of training. You can weight the different metrics: micro/macro recall/precision/F1 scores. By default only cats_score is taken into account, which for the multi-label classifier is, as far as I can tell from the scorer source, the macro-averaged AUC rather than an F1 score. Setting this depends very much on what problem you are trying to solve and what is more important from a business perspective.
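The choice between micro and macro averaging matters most when labels are unbalanced. The sketch below computes both F1 variants by hand on made-up predictions. The function names and the toy data are assumptions for illustration, and spaCy's own scorer may treat edge cases (such as labels with no positives) differently.

```python
def f1(tp, fp, fn):
    """F1 from raw counts; defined here as 0.0 when all counts are zero."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def micro_macro_f1(gold, pred, label_set):
    """gold and pred are lists of label sets, one set per document."""
    counts = {label: [0, 0, 0] for label in label_set}  # tp, fp, fn per label
    for g, p in zip(gold, pred):
        for label in label_set:
            if label in p and label in g:
                counts[label][0] += 1
            elif label in p:
                counts[label][1] += 1
            elif label in g:
                counts[label][2] += 1
    # Macro: average the per-label F1 scores, so every label weighs the same.
    macro = sum(f1(*c) for c in counts.values()) / len(label_set)
    # Micro: pool the counts over all labels first, so frequent labels dominate.
    totals = [sum(c[i] for c in counts.values()) for i in range(3)]
    micro = f1(*totals)
    return micro, macro


# Toy example: the frequent label "A" is always right, the rare label "B" is missed.
label_set = ["A", "B"]
gold = [{"A"}, {"A"}, {"A"}, {"B"}]
pred = [{"A"}, {"A"}, {"A"}, set()]

micro, macro = micro_macro_f1(gold, pred, label_set)
print(f"micro F1 = {micro:.3f}, macro F1 = {macro:.3f}")
```

Here the micro score stays high because the frequent label dominates the pooled counts, while the macro score is pulled down by the rare label that was missed. If rare labels matter for your use case, give more weight to the macro metrics.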

Training the model

spaCy makes this super simple:

> spacy train config_efficiency.cfg --paths.train ./ecthr_train.spacy --paths.dev ./ecthr_dev.spacy -o ecthr_model
ℹ Saving to output directory: ecthr_model
ℹ Using CPU

=========================== Initializing pipeline ===========================
[2022-09-15 11:24:46,510] [INFO] Set up nlp object from config
[2022-09-15 11:24:46,519] [INFO] Pipeline: ['textcat_multilabel']
[2022-09-15 11:24:46,528] [INFO] Created vocabulary
[2022-09-15 11:24:46,529] [INFO] Finished initializing nlp object
[2022-09-15 11:26:41,921] [INFO] Initialized pipeline components: ['textcat_multilabel']
✔ Initialized pipeline

============================= Training pipeline =============================
ℹ Pipeline: ['textcat_multilabel']
ℹ Initial learn rate: 0.001
E    #       LOSS TEXTC...  CATS_SCORE  SCORE
---  ------  -------------  ----------  ------
  0       0           0.25       53.31    0.53
  0     200          18.69       54.04    0.54
  0     400          16.42       53.91    0.54
  0     600          15.38       53.36    0.53
  0     800          15.10       54.18    0.54
  0    1000          14.22       54.17    0.54
  0    1200          14.58       53.56    0.54
  0    1400          15.57       55.64    0.56
  0    1600          14.29       56.39    0.56
  0    1800          15.79       56.68    0.57
  0    2000          13.49       57.56    0.58
  0    2200          14.21       56.86    0.57
  0    2400          17.43       57.06    0.57
  0    2600          15.71       58.51    0.59
  0    2800          13.17       56.02    0.56
  0    3000          14.36       57.86    0.58
  0    3200          17.20       58.35    0.58
  0    3400          14.84       57.91    0.58
  0    3600          14.22       56.84    0.57
  0    3800          17.36       59.81    0.60
  0    4000          15.39       54.60    0.55
  0    4200          12.04       58.29    0.58
  0    4400          12.85       58.35    0.58
  0    4600          12.25       58.71    0.59
  0    4800          14.68       59.31    0.59
  0    5000          18.53       59.00    0.59
  0    5200          13.58       59.54    0.60
  0    5400          16.04       58.90    0.59
✔ Saved pipeline to output directory
ecthr_model\model-last

And now we have two models in the ecthr_model folder: the last one (model-last) and the one that scored best according to the metrics defined in the config file (model-best).

Using the trained model

To use the model, load it in your inference pipeline and use it like any other spaCy model. The only difference is that the resulting Doc object will have its cats attribute filled with the predictions for your multi-label classification problem.

import spacy

nlp = spacy.load("ecthr_model/model-best")
d = nlp(text)
print(d.cats)

{'Article 2': 0.3531339466571808,
 'Article 3': 0.2542854845523834,
 'Article 5': 0.34043481945991516,
 'Article 6': 0.4782226085662842,
 'Article 8': 0.450054407119751,
 'Article 9': 0.45071953535079956,
 'Article 10': 0.3821248412132263,
 'Article 11': 0.5566793084144592,
 'Article 14': 0.47893860936164856,
 'Article 1 of Protocol 1': 0.3836081027984619}

The output is the probability for each label. The model was trained with a threshold of 0.5, so it would consider only “Article 11” to apply to this document, but you can choose a different threshold if you want a different precision/recall balance.
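Turning the scores into a list of labels is a one-liner. The sketch below applies two thresholds to the scores printed above. The helper name `predicted_labels` and the alternative threshold of 0.45 are arbitrary choices made for illustration.

```python
# Scores copied from the doc.cats output above.
scores = {
    "Article 2": 0.3531339466571808,
    "Article 3": 0.2542854845523834,
    "Article 5": 0.34043481945991516,
    "Article 6": 0.4782226085662842,
    "Article 8": 0.450054407119751,
    "Article 9": 0.45071953535079956,
    "Article 10": 0.3821248412132263,
    "Article 11": 0.5566793084144592,
    "Article 14": 0.47893860936164856,
    "Article 1 of Protocol 1": 0.3836081027984619,
}


def predicted_labels(cats, threshold=0.5):
    """Return the labels whose score reaches the threshold, in dict order."""
    return [label for label, score in cats.items() if score >= threshold]


print(predicted_labels(scores))        # default threshold of 0.5
print(predicted_labels(scores, 0.45))  # lower threshold: more labels, lower precision
```

With the default threshold only “Article 11” is returned. At 0.45 the list grows to five labels, which trades precision for recall.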

Cons of spaCy

- Training is slow. Even the efficient architecture, which uses an n-gram bag-of-words model (with a linear layer on top, I guess), takes half an hour to train. In contrast, scikit-learn can train a logistic regression in minutes.

- Documentation has gaps: you often have to dig into the spaCy source code to know exactly what is going on. Searching the internet is not always helpful either, because many answers and tutorials were written for previous versions of spaCy and are no longer relevant.

Conclusion

spaCy is another library that can be used to start training text classification models. It’s particularly great if you are already using it for some of the other things it provides, because then you need fewer dependencies, which can simplify model maintenance and deployment.